Claude

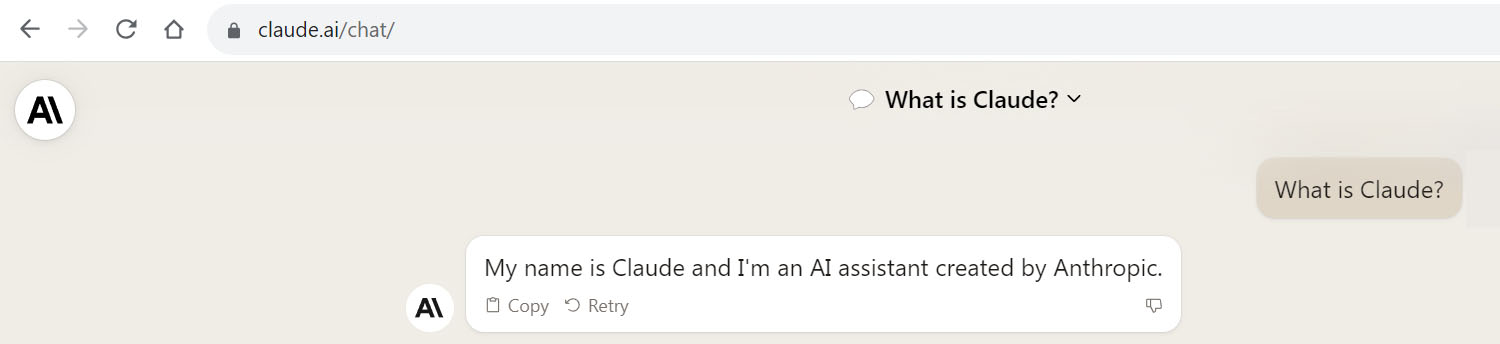

Claude screenshot

Claude is a conversational AI assistant developed by Anthropic and designed to be helpful, harmless, and honest. The goal is an assistant that gives users useful information while avoiding harmful or unethical behavior.

Some key features of Claude include:

- Trained using Constitutional AI, Anthropic's method for aligning a model with a written set of principles (a "constitution"). The method combines supervised learning on responses the model has critiqued and revised itself with reinforcement learning from AI-generated preference feedback.

- Communicates in natural language and can hold open-ended dialogues. Built on a large language model.

- Provides contextual responses to questions, summarizes information, and honestly admits mistakes or ignorance when needed.

- Designed to avoid deception, harm, and other unwanted or dangerous behavior, primarily through how it is trained.

- Intended to improve safely over time based on human feedback. Users can correct Claude during a conversation.

The motivation behind Claude is to develop an AI assistant that is helpful to users while resisting attempts to steer it toward harmful behavior. Its Constitutional AI training supplies explicit, top-down principles for safety. Claude represents an ambitious attempt to combine state-of-the-art conversational ability with robust alignment to human values.