Representation learning

Representation learning is a set of techniques in machine learning where a system automatically discovers the representations needed for a particular task. Traditional machine learning algorithms often rely on manual feature engineering, in which domain experts design the relevant input features by hand. Representation learning instead aims to automate this process, allowing the model to learn useful features or representations directly from the raw data.

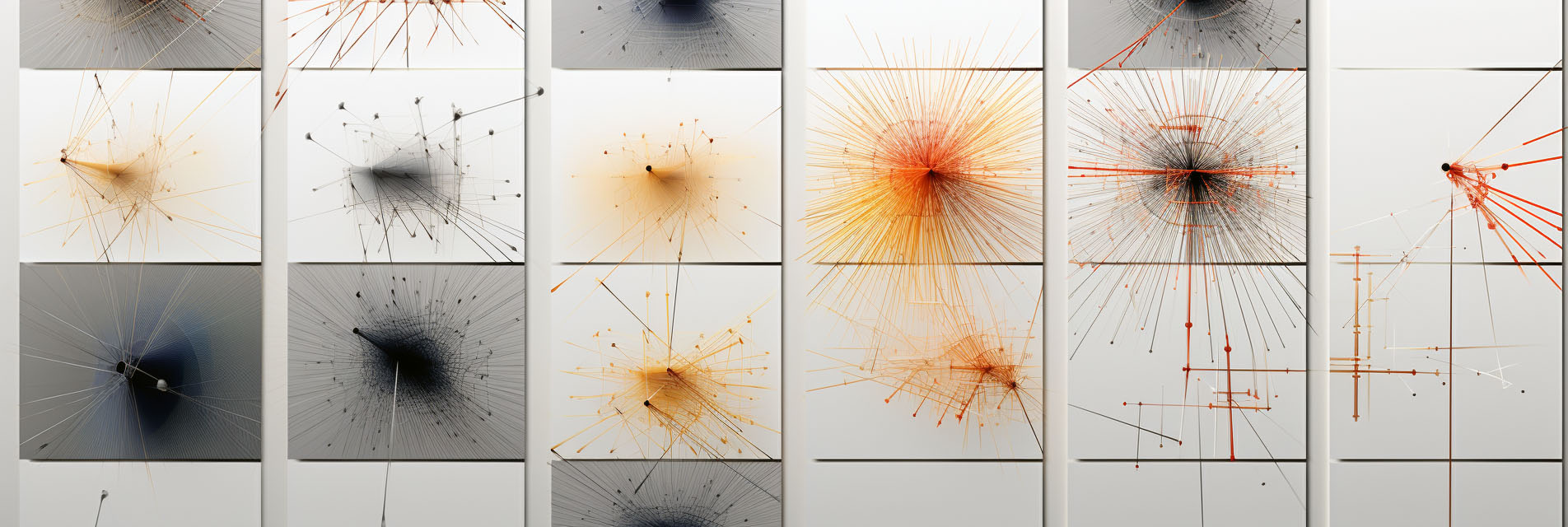

In the context of deep learning, representation learning is often associated with the ability of neural networks to automatically learn hierarchical features. For example, in a convolutional neural network (CNN) trained for image classification, the initial layers might learn to detect edges and textures, while deeper layers learn to identify more complex structures like shapes and objects. These learned features are then used for the final task, such as classifying the image into different categories.
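The sketch below shows, in plain Python, the operation a convolutional layer applies to an image: a small kernel slides over the image, and a ReLU follows. The kernel is a hand-picked, Sobel-style vertical-edge filter and the 5x6 image is a made-up example. In a trained CNN the kernel weights would be learned from data rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation CNN layers call 'convolution'."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum of the image patch under the kernel.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Element-wise ReLU: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 5x6 grayscale image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-chosen vertical-edge kernel; a CNN would learn its kernels instead.
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

response = relu(conv2d(image, vertical_edge))
for row in response:
    print(row)
```

Each output row is `[0.0, 4.0, 4.0, 0.0]`. The response is nonzero only where the window straddles the dark-to-bright boundary, which is the kind of low-level feature an early layer might pick up. Like most deep learning libraries, the function computes cross-correlation and does not flip the kernel.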

Representation learning is particularly useful in domains where manual feature engineering is challenging or impractical. In natural language processing, for instance, Word2Vec learns a single dense vector for each word, while BERT produces contextual representations that depend on the surrounding sentence. In both cases the vectors capture aspects of a word's meaning and its relationships to other words. These representations can then be used for tasks such as text classification, sentiment analysis, and machine translation.
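Word2Vec and BERT learn their vectors by training neural networks, which is too involved for a short example. The sketch below uses a simpler count-based technique, co-occurrence vectors, to illustrate the underlying idea that words appearing in similar contexts end up with similar vectors. The tiny corpus and the `cooccurrence_vectors` helper are hypothetical. The resulting vectors are sparse counts, not the dense learned embeddings described above.

```python
import math

# Hypothetical toy corpus; real embeddings are trained on far larger text collections.
corpus = [
    "cat drinks milk",
    "dog drinks water",
    "cat chases mouse",
    "dog chases ball",
    "cat sleeps",
    "car needs fuel",
    "truck needs fuel",
    "truck carries cargo",
]


def cooccurrence_vectors(sentences, window=1):
    """Map each word to counts of the words seen within `window` positions of it."""
    vocab = sorted({w for s in sentences for w in s.split()})
    index = {w: i for i, w in enumerate(vocab)}
    vectors = {w: [0.0] * len(vocab) for w in vocab}
    for s in sentences:
        words = s.split()
        for i, w in enumerate(words):
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    vectors[w][index[words[j]]] += 1.0
    return vectors


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


vectors = cooccurrence_vectors(corpus)
print(round(cosine(vectors["cat"], vectors["dog"]), 3))  # share "drinks" and "chases"
print(round(cosine(vectors["cat"], vectors["car"]), 3))  # share no context words
```

"cat" and "dog" share two context words, so their similarity is about 0.816. "cat" and "car" share none and score 0.0. Word2Vec learns short dense vectors from the same kind of context information by training a shallow network to predict words from their neighbors, or neighbors from words.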

Unsupervised techniques such as autoencoders and generative models are also commonly used for representation learning. An autoencoder, for example, learns to encode the input into a lower-dimensional code and then decode that code back into a reconstruction of the original input. Because the code must keep enough information to rebuild the input, it often captures the essential characteristics of the data. This makes it useful for tasks such as clustering, or anomaly detection, where inputs that reconstruct poorly can be flagged as unusual.
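The following is a minimal sketch of the autoencoder idea in plain Python. It assumes the simplest possible case: a linear encoder and decoder with a one-dimensional code, trained by full-batch gradient descent to minimize mean squared reconstruction error. The training data are hypothetical 2-D points lying on the line x2 = 2·x1. Practical autoencoders use nonlinear neural networks and a framework such as PyTorch, and the function names here are illustrative only.

```python
# Hypothetical 2-D data lying on the line x2 = 2 * x1, centered at the origin.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def encode(w_enc, x):
    """1-D code: dot product of the input with the encoder weights."""
    return w_enc[0] * x[0] + w_enc[1] * x[1]


def decode(w_dec, z):
    """Map the 1-D code back to 2-D by scaling the decoder weights."""
    return (w_dec[0] * z, w_dec[1] * z)


def reconstruction_error(w_enc, w_dec, x):
    """Squared Euclidean distance between x and its reconstruction."""
    x_hat = decode(w_dec, encode(w_enc, x))
    return (x[0] - x_hat[0]) ** 2 + (x[1] - x_hat[1]) ** 2


def train(data, lr=0.05, steps=500):
    """Full-batch gradient descent on the mean squared reconstruction error."""
    w_enc = [0.1, 0.1]
    w_dec = [0.1, 0.1]
    n = len(data)
    for _ in range(steps):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            z = encode(w_enc, x)
            x_hat = decode(w_dec, z)
            r = (x_hat[0] - x[0], x_hat[1] - x[1])  # residual
            # d||r||^2 / d w_dec[k] = 2 * r[k] * z
            for k in range(2):
                g_dec[k] += 2.0 * r[k] * z / n
            # d||r||^2 / d w_enc[j] = 2 * (r . w_dec) * x[j]
            r_dot_wdec = r[0] * w_dec[0] + r[1] * w_dec[1]
            for j in range(2):
                g_enc[j] += 2.0 * r_dot_wdec * x[j] / n
        # Update both weight vectors using gradients from the old weights.
        for k in range(2):
            w_dec[k] -= lr * g_dec[k]
            w_enc[k] -= lr * g_enc[k]
    return w_enc, w_dec


w_enc, w_dec = train(data)
train_errors = [reconstruction_error(w_enc, w_dec, x) for x in data]
anomaly = (2.0, -1.0)  # hypothetical point far off the training line
print(max(train_errors), reconstruction_error(w_enc, w_dec, anomaly))
```

The training points reconstruct almost perfectly, because the 1-D code captures the single direction along which the data vary. The off-line point (2, -1) gets a large reconstruction error, which is how reconstruction error can serve as an anomaly score. A linear autoencoder trained with squared error learns the same subspace as principal component analysis. The nonlinear layers of deep autoencoders are what let them go beyond this linear case.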

However, representation learning is not without challenges. One issue is that the learned representations are often specific to the task and data they were trained on, limiting their generalizability. Another challenge is interpretability; the features learned by deep neural networks are often difficult to understand, raising concerns in applications where explainability is crucial.

In summary, representation learning is a subfield of machine learning focused on automatically discovering useful features or representations for a specific task. It greatly reduces the need for manual feature engineering and is a key factor behind the success of deep learning models in domains such as computer vision and natural language processing. While powerful, it also presents challenges related to generalizability and interpretability.