Token

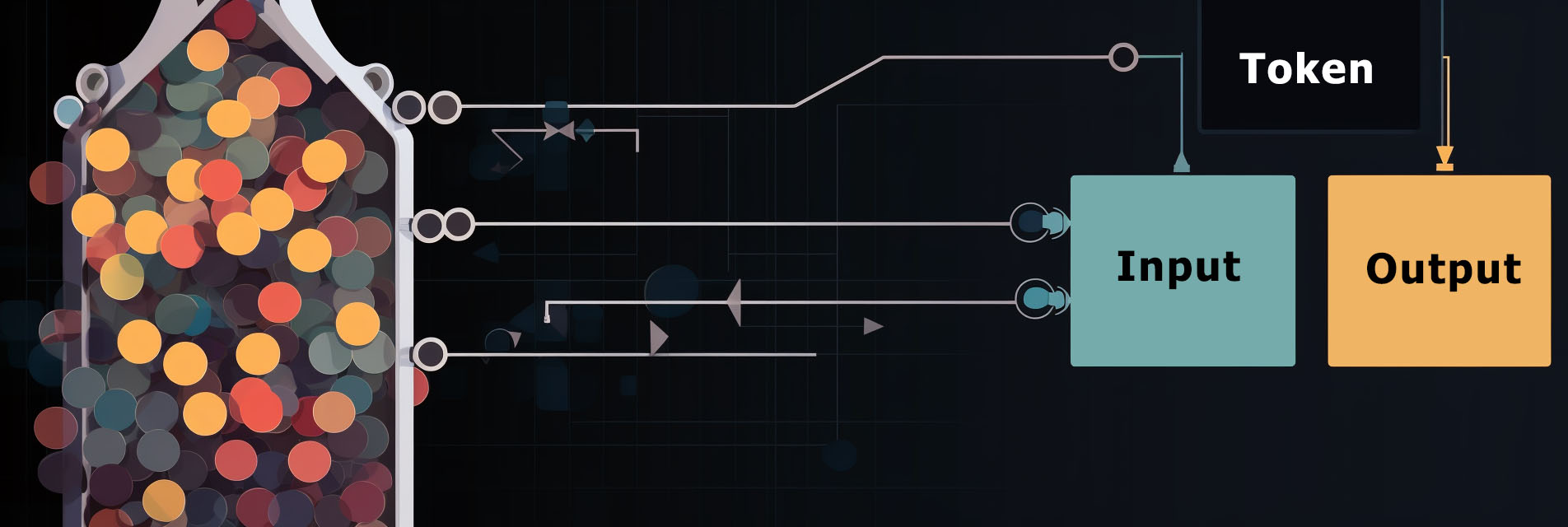

In AI, a token is a discrete unit of text, such as a word, a piece of a word, or a single character, that serves as an input to or output from a machine learning model. Key points about tokens:

- Tokens are the basic building blocks for natural language processing (NLP) tasks. Sentences and documents are split into tokens, a step called tokenization, before being fed into NLP models.

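- Common token types include words, characters, and subword units, such as those produced by byte-pair encoding (BPE). The choice of token type is a trade-off: smaller units can represent any input text, including rare or unseen words, but produce longer sequences, while larger units give shorter sequences but need a larger vocabulary.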

- Each token is mapped to an integer ID from a fixed vocabulary and then to a vector (an embedding) before going into a model. The embedding encodes information about the token based on patterns learned from large amounts of text data.

- During training, an NLP model learns relationships between tokens based on their embeddings to perform tasks like translation, text generation, or question answering.

- Pretrained token embeddings from large neural networks like BERT and GPT-3 capture semantic and syntactic information about language that can be transferred to downstream tasks.

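- Special tokens such as [CLS] and [SEP] are used in transformer models like BERT to mark the structure of the input: [CLS] is placed at the start of the sequence, and its final representation is typically used for classification, while [SEP] marks the boundary between text segments.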
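
The path from raw text to model input described in the list above can be sketched in a few lines of Python. This is a minimal illustration, not how BERT or GPT-3 tokenize in practice: it assumes plain whitespace word-level tokenization, and the helper names (`tokenize`, `build_vocab`, `encode`), the `[PAD]` and `[UNK]` entries, and the random embedding table are all hypothetical stand-ins. In a real model the embedding values are learned during training.

```python
import random

# Special tokens get the first IDs in the vocabulary (an assumption of this sketch).
SPECIAL_TOKENS = ["[PAD]", "[UNK]", "[CLS]", "[SEP]"]

def tokenize(text):
    """Lowercase and split on whitespace (word-level tokens)."""
    return text.lower().split()

def build_vocab(corpus):
    """Assign an integer ID to each special token and each distinct word."""
    vocab = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
    for sentence in corpus:
        for tok in tokenize(sentence):
            if tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab):
    """Wrap the tokens in [CLS] ... [SEP] and map each one to its ID."""
    tokens = ["[CLS]"] + tokenize(text) + ["[SEP]"]
    # Words missing from the vocabulary fall back to the [UNK] ID.
    return [vocab.get(tok, vocab["[UNK]"]) for tok in tokens]

corpus = ["the cat sat", "the dog ran"]
vocab = build_vocab(corpus)
ids = encode("the cat ran fast", vocab)

# Embedding table: one vector per vocabulary entry.
# Random values here; a trained model would have learned them.
rng = random.Random(0)
EMBED_DIM = 4
embedding_table = [
    [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
    for _ in range(len(vocab))
]

# The model input is the sequence of vectors looked up by token ID.
vectors = [embedding_table[i] for i in ids]

print(ids)
print(len(vectors), "vectors of dimension", EMBED_DIM)
```

Note that "fast" never appears in the toy corpus, so it is encoded with the `[UNK]` ID. Subword tokenizers exist largely to avoid this loss of information.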

In short, tokens are the basic units of text that are encoded numerically and used as the inputs and outputs of NLP models, which learn statistical patterns over them. The choice of tokenization affects vocabulary size, sequence length, and, as a result, model performance.
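
As a rough illustration of why the choice of tokenization matters, the sketch below compares word-level and character-level tokens on a toy example. The strings and the helper names (`word_tokens`, `char_tokens`, `oov_count`) are invented for this example, and the comparison covers only sequence length, vocabulary size, and out-of-vocabulary (OOV) tokens. It does not measure model quality.

```python
def word_tokens(text):
    """Word-level tokens: split on whitespace."""
    return text.split()

def char_tokens(text):
    """Character-level tokens: every character, including spaces."""
    return list(text)

def oov_count(tokens, vocab):
    """Count tokens that do not appear in the given vocabulary."""
    return sum(1 for tok in tokens if tok not in vocab)

# Toy "training" text used to build each vocabulary, and unseen "test" text.
train = "tokenization splits text into tokens"
test = "tokenizers split texts"

word_vocab = set(word_tokens(train))
char_vocab = set(char_tokens(train))

word_seq = word_tokens(test)
char_seq = char_tokens(test)

print("word level: vocab", len(word_vocab), "| sequence length", len(word_seq),
      "| OOV", oov_count(word_seq, word_vocab))
print("char level: vocab", len(char_vocab), "| sequence length", len(char_seq),
      "| OOV", oov_count(char_seq, char_vocab))
```

In this toy case every word in the test text is unseen at the word level, while the character-level sequence is much longer but almost fully covered by its vocabulary. Subword schemes sit between these two extremes.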