Transformer

Transformers are a type of neural network architecture that has revolutionized the field of artificial intelligence (AI). They were introduced in the 2017 paper "Attention Is All You Need" and have since achieved state-of-the-art results on a wide range of AI tasks, including:

- Natural language processing (NLP): Transformers are now the standard architecture for NLP tasks such as machine translation, text summarization, and question answering.

- Computer vision: Transformers are increasingly used for tasks such as image classification, object detection, and image segmentation.

- Speech recognition and synthesis: Transformer-based models are used in both speech recognition and speech synthesis systems.

Transformers achieve these results largely because they can learn long-range dependencies in sequential data. This matters for tasks such as machine translation, where the meaning of a word can depend on words far away from it in the sentence.

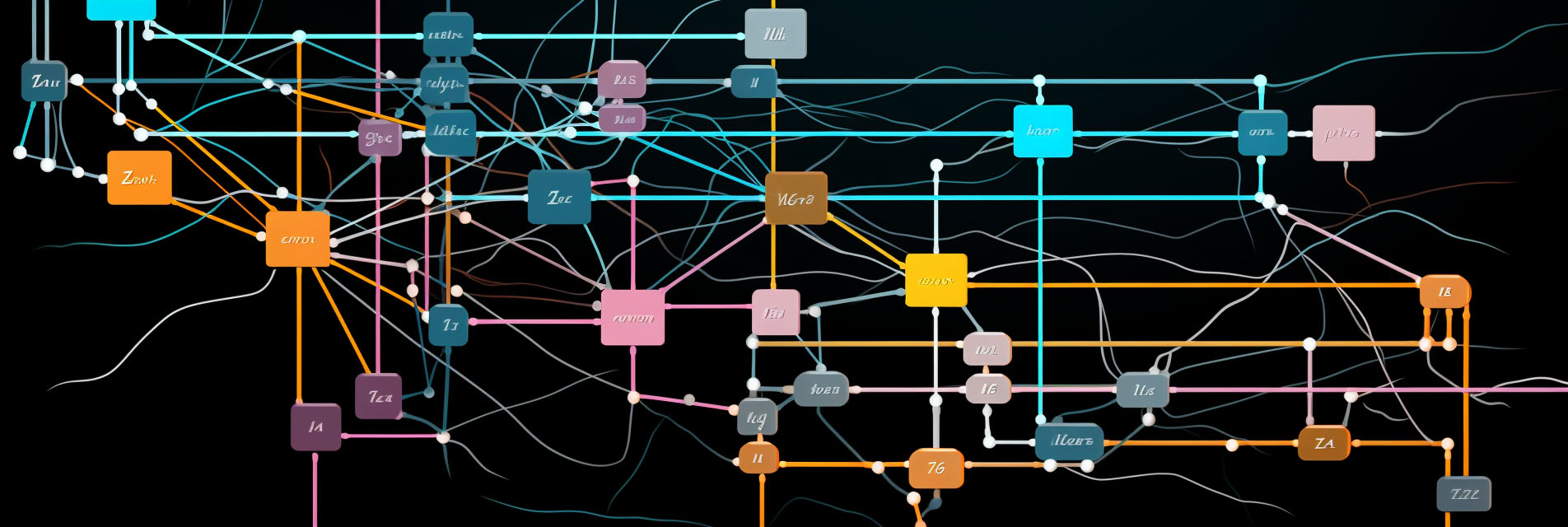

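Transformers work by using a self-attention mechanism. For each position in the input sequence, the model computes a weighted combination of every position in that sequence. The weights are derived from learned projections of the input, so the model learns which parts of the sequence to attend to. This contrasts with earlier architectures such as recurrent neural networks (RNNs). RNNs process a sequence one step at a time and must carry information about earlier inputs forward in a fixed-size hidden state, which makes dependencies between distant positions harder to learn.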
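The mechanism described above can be sketched as single-head scaled dot-product self-attention, softmax(QKᵀ/√d_k)·V, the attention formula from "Attention Is All You Need". This is a minimal NumPy sketch, not a full Transformer layer. The projection matrices `w_q`, `w_k`, `w_v` are random stand-ins for learned parameters, and the sizes are arbitrary illustrative choices. Multiple heads, masking, and the rest of the layer are omitted.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max along the axis for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x:        (seq_len, d_model) input sequence
    w_q, w_k: (d_model, d_k) query/key projections (illustrative stand-ins)
    w_v:      (d_model, d_v) value projection (illustrative stand-in)
    Returns (output, weights); weights[i, j] is how strongly position i attends to j.
    """
    q = x @ w_q
    k = x @ w_k
    v = x @ w_v
    d_k = q.shape[-1]
    # Score every position against every other position, scaled by sqrt(d_k).
    scores = q @ k.T / np.sqrt(d_k)      # (seq_len, seq_len)
    weights = softmax(scores, axis=-1)   # each row sums to 1
    # Each output row is a weighted mix of the value vectors of all positions.
    return weights @ v, weights


# Hypothetical toy sizes; random matrices stand in for trained weights.
rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 8, 4
x = rng.normal(size=(seq_len, d_model))
w_q = rng.normal(size=(d_model, d_k))
w_k = rng.normal(size=(d_model, d_k))
w_v = rng.normal(size=(d_model, d_k))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.shape)
```

Because every row of `weights` covers the whole sequence, position 0 can draw on position 4 directly in a single step. An RNN would instead have to pass that information through every intermediate hidden state.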

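Transformers are also efficient to train. Self-attention has no step-by-step recurrence, so the computation for all positions in a sequence can be parallelized. As a result, Transformers typically train much faster on modern hardware than RNNs, which makes them a practical choice for a wide range of AI applications. One caveat is that standard self-attention compares every position with every other position, so its compute and memory cost grows quadratically with sequence length.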
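The difference in parallelism can be illustrated with a small NumPy sketch. A vanilla RNN needs a Python loop in which each step waits for the previous hidden state. The attention scores for all position pairs, by contrast, come from a single matrix product. The function names, sizes, and random weights here are hypothetical and chosen only for illustration. The sketch shows the dependency structure and the shape of the score matrix; it does not benchmark real speed.

```python
import numpy as np


def rnn_hidden_states(x, w_x, w_h):
    # Vanilla (Elman) RNN: h_t = tanh(x_t @ W_x + h_{t-1} @ W_h).
    # Step t depends on step t-1, so this loop is inherently sequential.
    h = np.zeros(w_h.shape[0])
    states = []
    for t in range(x.shape[0]):
        h = np.tanh(x[t] @ w_x + h @ w_h)
        states.append(h)
    return np.stack(states)


def attention_scores(x, w_q, w_k):
    # All pairwise query-key scores come from one matrix product with no
    # dependency between positions, so they can be computed in parallel.
    q, k = x @ w_q, x @ w_k
    return q @ k.T / np.sqrt(q.shape[-1])


# Hypothetical sizes and random stand-in weights.
rng = np.random.default_rng(0)
d_model, d_hidden, d_k = 8, 16, 4
w_x = rng.normal(size=(d_model, d_hidden)) * 0.1
w_h = rng.normal(size=(d_hidden, d_hidden)) * 0.1
w_q = rng.normal(size=(d_model, d_k))
w_k = rng.normal(size=(d_model, d_k))

for seq_len in (128, 1024):
    x = rng.normal(size=(seq_len, d_model))
    states = rnn_hidden_states(x, w_x, w_h)  # seq_len sequential steps
    scores = attention_scores(x, w_q, w_k)   # one batched computation
    print(seq_len, states.shape, scores.shape)
```

The same sketch shows the quadratic cost. Going from 128 to 1024 positions (8× longer) grows the score matrix from 128×128 to 1024×1024 entries, which is 64× more.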